by Sarah Nichols

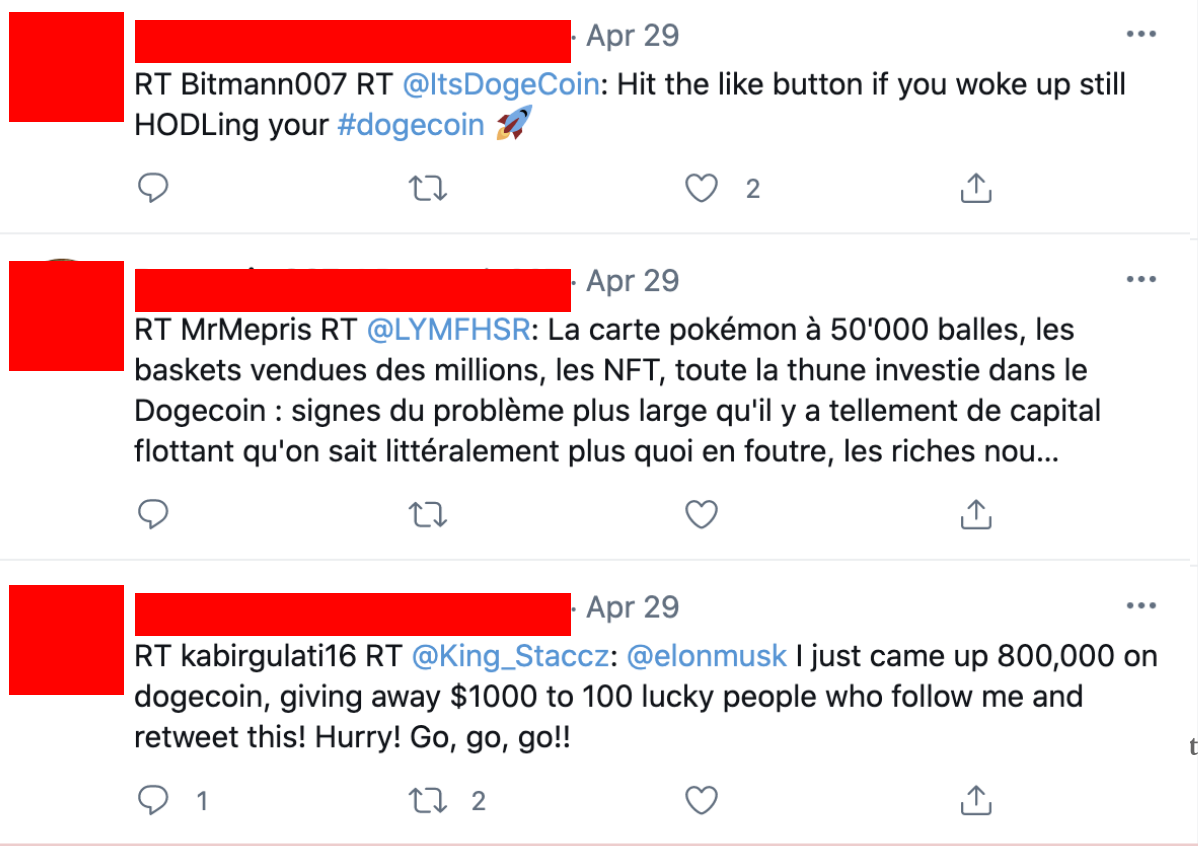

Above: A snapshot of Twitter activity related to NFTs from earlier this year

Although bots take many forms, the goal of this website is to spread awareness about social media bot behavior. When I refer to bots, I am referring to fraudulent, automated social media accounts.

How do you know that a bot is a bot?

Well, it's complicated, and certainly more art than science. The only real way to know where content is coming from on the internet is to trace some kind of identifying information: a location tag, email address, photo, or IP address are all critical pieces of the puzzle. However, bots on social media don't just hand this information over, and the platforms (i.e., Twitter, Facebook, etc.) obviously need to keep certain pieces to themselves to protect their regular users. So, this section will cover the basics of OSINT (Open Source INTelligence) and teach you how to use the pieces of information we can obtain in order to identify a bot.

Why is it so hard?

Typical methods of attribution (i.e., IP address tracking) aren't available to the rest of us on social media for security reasons. We don't run the platform, so we don't get access to that data. We might suspect a group of users is a bot network, but we can't simply find out which computers are being used and get all of the content in the network. We can only look at the accounts we happen to find, which could get banned or changed depending on how sophisticated the botnet operator is. Technically, we don't conclusively know where or who a given post is actually coming from unless the botnet operator decides to make some very bad choices that give us clues.

So what can we use to give us clues?

Bots tend to have predictable patterns because randomness can be computationally expensive. If you're sending instructions to hundreds of bots, it's much easier for debugging purposes to have them post at a given time on the platform you've managed to automate than to have them post at random times. That said, attribution by timing is not always meaningful on its own, as many people use software to schedule their posts for high-traffic timeframes. An account that usually tweets at exactly 9am might just be scheduling some of its posts.

It's also harder, and a hassle, to program a bot to tweet from multiple hardware platforms, because the bot operator needs a slightly different protocol for each one. Automating an iPhone is different from automating a laptop, and if the code works on one there's little incentive to make it work on another. As a result, repeated posts from the same hardware signal (i.e., posts made from a phone) at the same time can be an indicator of bot behavior. Still, some people do only tweet from their phone or computer, so platform indicators are not conclusive evidence on their own. It's up to you to discern, based on all of the evidence, how likely an account is to be a bot.

This image is a simple tweet timetable of a suspected bot account. As you can see, it tweets at very regular times using one hardware platform (blue), but has a variety of irregular tweets from a different hardware platform (yellow). This type of behavior is affectionately called "cyborg" activity. Basically, the bot is very poor quality, so the bot maker will jump in during the day to tweet and like posts (i.e., engage in more "human" behavior) from their computer to keep the bot from being found out. Of course, this takes a lot of time and attention, so sometimes the bot maker gets lazy and we end up with graphs like this one that clearly show a pattern. This data can be found using the Twitter API or Allegedly, which is the site that was used here.
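If you'd like to try the timing-and-platform check on data you've already collected, here is a minimal sketch. It assumes you have a list of (timestamp, source label) pairs for one account; the function name `timing_profile`, the labels "phone" and "web", and the sample dates are all made up for illustration and don't come from any platform's API. For each source, it reports how many posts there were and what share of them landed in that source's single most common hour-and-minute slot.

```python
from collections import Counter, defaultdict
from datetime import datetime


def timing_profile(posts):
    """Summarize posting regularity per source.

    posts: iterable of (datetime, source_label) pairs (hypothetical input format).
    Returns {source_label: (post_count, share_in_most_common_minute)}.
    """
    slots_by_source = defaultdict(list)
    for posted_at, source in posts:
        # Keep only the time of day, so a daily 9:00 post lines up across dates.
        slots_by_source[source].append((posted_at.hour, posted_at.minute))

    profile = {}
    for source, slots in slots_by_source.items():
        # Count of posts in the busiest (hour, minute) slot for this source.
        top_count = Counter(slots).most_common(1)[0][1]
        profile[source] = (len(slots), top_count / len(slots))
    return profile


# Invented sample: a "phone" source that always posts at 9:00,
# and a "web" source that posts at scattered times.
sample = [
    (datetime(2022, 3, 1, 9, 0), "phone"),
    (datetime(2022, 3, 2, 9, 0), "phone"),
    (datetime(2022, 3, 3, 9, 0), "phone"),
    (datetime(2022, 3, 4, 9, 0), "phone"),
    (datetime(2022, 3, 1, 13, 17), "web"),
    (datetime(2022, 3, 2, 22, 41), "web"),
    (datetime(2022, 3, 4, 16, 5), "web"),
]

profile = timing_profile(sample)
for source, (count, share) in sorted(profile.items()):
    print(f"{source}: {count} posts, {share:.0%} in the busiest minute")
```

A share near 100% from one source alongside scattered posts from another looks like the "cyborg" pattern described above, but a person using a scheduling tool would produce the same numbers, so treat it as one clue among several.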

Coming up with unique content for a bot to post can be very difficult. It's difficult for a person to do, and a botnet needs a constant stream of content to cycle through; otherwise the code runs the risk of breaking (think "404 ERROR content not found" across 1000 computers and you get the idea). As a result, most amateur bot makers will just have their bots make passive interactions (liking or reposting a post, etc.) rather than going through the headache of programming them to post content. Those that do create content usually do so programmatically because of the scale of content required, meaning that they will make "fill in the blank" posts like you see here, or pull from a list of approved, neutral reactions (e.g., a heart emoji in response to a given post). Because the content repeats itself across the network, if you find one target post (e.g., a retweeted image), you will usually find other bots within the botnet doing the same thing.

With large botnets, it becomes more difficult and taxing code-wise to make every bot in the network behave convincingly and somewhat randomly. It is computationally and labor intensive to make a more convincing bot that does behave somewhat like a person. Think of what you do on social media every day (scroll through your feed, follow others, like posts, retweet, interact with others in comments, have conversations in DMs, etc.). Telling a robot to do one of those tasks might work, but all of them together quickly make for some difficult instructions. As a result, the more sophisticated bots usually have a substantial amount of money behind them or are ideologically incentivized.
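The "find one target post, find the rest of the network" idea above can be roughed out in a few lines. This is only a sketch under my own assumptions: posts are (account, text) pairs you have already gathered, the account names and sample text are invented, and the normalization (lowercasing, dropping links and @mentions) is one simple choice among many. The function name `shared_posts` and the `min_accounts` threshold are hypothetical.

```python
import re
from collections import defaultdict


def normalize(text):
    """Reduce a post to a comparable form: lowercase, no links, no @mentions."""
    text = text.lower()
    text = re.sub(r"https?://\S+", "", text)  # drop URLs, which often differ per bot
    text = re.sub(r"@\w+", "", text)          # drop mentions of other accounts
    return re.sub(r"\s+", " ", text).strip()  # collapse leftover whitespace


def shared_posts(posts, min_accounts=3):
    """Return {normalized_text: [accounts]} for text posted by many accounts.

    posts: iterable of (account, text) pairs (hypothetical input format).
    min_accounts: arbitrary illustrative threshold, not an established cutoff.
    """
    accounts_by_text = defaultdict(set)
    for account, text in posts:
        accounts_by_text[normalize(text)].add(account)
    return {
        text: sorted(accounts)
        for text, accounts in accounts_by_text.items()
        if text and len(accounts) >= min_accounts
    }


# Invented sample data for illustration.
sample = [
    ("acct_a", "Check out this NFT drop! https://example.com/1"),
    ("acct_b", "check out this  NFT drop! https://example.com/2"),
    ("acct_c", "@someone Check out this NFT drop!"),
    ("acct_d", "Had a great lunch today"),
]

clusters = shared_posts(sample)
for text, accounts in clusters.items():
    print(f"{len(accounts)} accounts posted: {text!r} -> {accounts}")
```

Exact matching like this only catches copy-paste content. "Fill in the blank" posts that swap a word or two would need fuzzier comparison, and real people also repost the same slogans, so a cluster is a lead to investigate rather than proof.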

Many more sophisticated botnets use machine-learning-generated faces in order to appear more plausibly human and trustworthy. Because these people don't exist, the images appear unique and don't look stolen if you attempt a reverse image search. However, these accounts are easy to spot if you're aware of certain clues (e.g., the eyes are always in the same place). Other clues like weird earrings that don't match, glasses made of aether, and hats that blend into hair are all common glitches you can spot in GAN profile pictures. Image from the Verge Article

Typically, bots are awful at appearing to be human, and as a result few humans will follow or interact with them. This usually causes bot accounts to have high activity and a high number of accounts that they follow, while very few accounts follow back or interact. Occasionally, bot accounts in more sophisticated operations will have paid for other bots to follow their bot accounts in order to make them appear popular at a glance, so taking a look at their followers will reveal a large number of bot accounts. This isn't definitive evidence, since plenty of accounts have disproportionate follower/following ratios, but it can be a red flag.
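As a rough sketch, the follower/following red flag can be written as a tiny check. The thresholds here (`min_following=500`, `max_ratio=0.1`) are numbers I picked purely for illustration, not established cutoffs, and the function name `follow_ratio_flag` is hypothetical. It only looks at two counts you can read off a public profile.

```python
def follow_ratio_flag(followers, following, min_following=500, max_ratio=0.1):
    """Return True when an account follows many others but few follow back.

    min_following and max_ratio are arbitrary illustrative thresholds.
    """
    # Small or empty following lists tell us nothing (and avoid dividing by zero).
    if following == 0 or following < min_following:
        return False
    return followers / following <= max_ratio


# Invented example accounts: (followers, following)
examples = {
    "mass_follower": (12, 4800),  # follows thousands, almost nobody follows back
    "ordinary_user": (900, 1000),
    "new_account": (0, 10),
}

flags = {name: follow_ratio_flag(*counts) for name, counts in examples.items()}
for name, flagged in flags.items():
    print(f"{name}: {'red flag' if flagged else 'nothing unusual'}")
```

Keep in mind that bots with purchased followers will pass this check, and plenty of real people fail it, so it is a reason to look closer and nothing more.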

Note: None of these names are "official" in the lexicon of botnet research

All of these bot "types" can have different structures


Catfish bots exist to act like people. They aren't convincing, but they are there to stop users from getting too lonely and logging off. You'll typically see them in newer MMO games or sites where player satisfaction and investment are vital and they're still building a user base. Basically, they're dumb and not great company, but we'd feel lonely without them.

Puffins are also social animals and are known to mimic decoys in the wild. Image Source

Most tasks on MMOs or games can be repetitive and grind-heavy, so having bots walking around and grinding away makes users want to fit in with the crowd. This type is similar to the Catfish, playing off of human social instincts, but is instead active and/or visible in a community.

You usually see these bots on Reddit. They're there to perform a task like reminding you of an event, responding to a prompt, or googling a fact. They keep things moving on the platform and help users out with tedious things that would otherwise take them away from the site.

A GIF from the TV show "The Office" demonstrating general chaos.

They exist to cause chaos and are not very good at anything else. They contain spam, broken code, etc. They've always been here and always will be. The platforms know this, can't stop it, and are the embodiment of this gif. No one can stop it. Everything is fine.

These bots mainly exist to increase views of marketing campaigns or misinfo & disinfo campaigns. They game site algorithms in order to make them 'work' for a certain price. The goal is to get as many people as possible to see the information they're hired to spread. Essentially, they want the content to go viral. They typically use botnets and SEO in a combination of trial and error to help the content gain traction quickly.

A combination of mimic and amplification bots, these bots exist to amplify messages and pose as reasonably human-like, often making real people believe a message is more widely accepted or normalized.

You usually see this with sponsored troll farms (i.e., real people paid to tweet content), but it's possible with botnets too.

But what do botnets even look like in the wild? Well, I've collected a list of examples that I've observed over the past year on a page I'm calling "Botnet Taxonomy" below.

It's important to note when reading about botnets that the bot-making space is very fluid (platforms and bot populations change constantly) and almost any content you find reporting on bots will have a bias. The research is difficult and subjective without the help of the platforms (Twitter, Facebook, etc.), and we make do with what we can. That said, here are some of the papers and articles I found to be worth mentioning if you're interested in knowing more:

That's all of the shiny content I have for you in this little corner of the internet. I hope you have enjoyed it. Stay safe out there.